In January, the emergence of DeepSeek’s R1 artificial intelligence program prompted a stock market selloff. Seven weeks later, chip giant Nvidia, the dominant force in AI processing, seeks to place itself squarely in the middle of the dramatic economics of cheaper AI that DeepSeek represents.

On Tuesday, at the SAP Center in San Jose, Calif., Nvidia co-founder and CEO Jensen Huang discussed how the company’s Blackwell chips can dramatically accelerate DeepSeek R1.

Nvidia claims that, using new open-source software called Nvidia Dynamo, its GPUs can deliver 30 times the throughput, measured in tokens per second, that DeepSeek R1 would normally achieve in a data center.

“Dynamo can capture that benefit and deliver 30 times more performance in the same number of GPUs in the same architecture for reasoning models like DeepSeek,” said Ian Buck, Nvidia’s head of hyperscale and high-performance computing, in a media briefing before Huang’s keynote at the company’s GTC conference.

The Dynamo software, available today on GitHub, distributes inference work across as many as 1,000 Nvidia GPU chips. More work can be accomplished per second of machine time by breaking up the work to run in parallel.

The result: For an inference task priced at $1 per million tokens, more of the tokens can be run each second, boosting revenue per second for services providing the GPUs.
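The arithmetic behind that claim can be sketched in a few lines of Python. This is only an illustration: the $1 price and the 30x multiplier come from the figures above, while the baseline throughput and the `revenue_per_second` helper are hypothetical and are not Nvidia benchmarks.

```python
# Illustrative arithmetic only. BASELINE_TPS is a made-up number,
# not a measured Nvidia or DeepSeek figure.

def revenue_per_second(tokens_per_second: float, price_per_million_tokens: float) -> float:
    """Revenue earned each second at a given token throughput and price."""
    return tokens_per_second / 1_000_000 * price_per_million_tokens

PRICE = 1.00            # dollars per million tokens (figure quoted in the article)
BASELINE_TPS = 10_000   # hypothetical baseline throughput for a cluster
SPEEDUP = 30            # Nvidia's claimed throughput gain with Dynamo

baseline = revenue_per_second(BASELINE_TPS, PRICE)
boosted = revenue_per_second(BASELINE_TPS * SPEEDUP, PRICE)

print(f"baseline: ${baseline:.2f}/s, with 30x throughput: ${boosted:.2f}/s")
```

At a fixed price, revenue per second scales linearly with tokens per second, so any throughput gain on the same hardware passes straight through to revenue.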

Buck said service providers can then decide to run more customer queries on DeepSeek or devote more processing to a single user to charge more for a “premium” service.

Premium services

“AI factories can offer a higher premium service at premium dollar per million tokens,” said Buck, “and also increase the total token volume of their whole factory.” The term “AI factory” is Nvidia’s coinage for large-scale services that run a heavy volume of AI work using the company’s chips, software, and rack-based equipment.

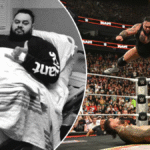

Nvidia DGX Spark and DGX Station.

Nvidia

The prospect of using more chips to increase throughput (and therefore business) for AI inference is Nvidia’s answer to investor concerns that less computing would be used overall because DeepSeek can cut the amount of processing needed for each query.

Used with Blackwell, the current model of Nvidia’s flagship AI GPU, Dynamo can make such AI data centers produce 50 times as much revenue as with the older model, Hopper, said Buck.

Nvidia has posted its own tweaked version of DeepSeek R1 on Hugging Face. The Nvidia version reduces the number of bits R1 uses to represent each value to what’s known as “FP4,” or four-bit floating point, which requires a fraction of the computing needed for standard 32-bit floating point (FP32) or 16-bit bfloat16.
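To give a rough sense of what fewer bits per value means, the sketch below compares the memory needed to hold a model’s weights in each format. The parameter count is a hypothetical round number, not DeepSeek R1’s actual size, and the calculation ignores the scaling factors and other overhead that real low-precision formats carry.

```python
# Bits per stored value for each numeric format named in the article.
BITS_PER_VALUE = {"FP32": 32, "BF16": 16, "FP4": 4}

def weight_memory_gb(num_parameters: int, fmt: str) -> float:
    """Approximate memory for the weights alone, in gigabytes (10**9 bytes)."""
    return num_parameters * BITS_PER_VALUE[fmt] / 8 / 1e9

# Hypothetical model size chosen for round numbers (an assumption,
# not DeepSeek R1's real parameter count).
NUM_PARAMETERS = 1_000_000_000

for fmt in BITS_PER_VALUE:
    print(f"{fmt}: {weight_memory_gb(NUM_PARAMETERS, fmt):.1f} GB")
```

Under these assumptions, FP4 weights take one-eighth the space of FP32 and one-quarter the space of bfloat16, which also means less data to move through the chip for every token generated.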

“It increases the performance from Hopper to Blackwell substantially,” said Buck. “We did that without any meaningful changes or reductions or loss of the accuracy model. It’s still the great model that produces the smart reasoning tokens.”

In addition to Dynamo, Huang unveiled the newest version of Blackwell, “Ultra,” following on the first model that was unveiled at last year’s show. The new version enhances various aspects of the existing Blackwell 200, such as increasing memory from 192GB of HBM3e high-bandwidth memory to as much as 288GB.

When combined with Nvidia’s Grace CPU chip, a total of 72 Blackwell Ultras can be assembled in the company’s NVL72 rack-based computer. The system will increase the inference performance running at FP4 by 50% over the existing NVL72 based on the Grace-Blackwell 200 chips.
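Taking the per-chip memory figures quoted above at face value, a back-of-the-envelope calculation shows what they imply for a full rack. The helper name is hypothetical, and 288GB is an upper bound (“as much as”), so the result is a ceiling rather than a published specification.

```python
GPUS_PER_RACK = 72           # Blackwell chips in one NVL72 rack (from the article)
HBM_PER_GPU_B200_GB = 192    # existing Blackwell 200 (from the article)
HBM_PER_GPU_ULTRA_GB = 288   # Blackwell Ultra, "as much as" (from the article)

def rack_hbm_tb(gpus: int, gb_per_gpu: int) -> float:
    """Total GPU memory in a rack, in terabytes (1 TB = 1,000 GB)."""
    return gpus * gb_per_gpu / 1000

existing = rack_hbm_tb(GPUS_PER_RACK, HBM_PER_GPU_B200_GB)
ultra = rack_hbm_tb(GPUS_PER_RACK, HBM_PER_GPU_ULTRA_GB)
increase_pct = (ultra / existing - 1) * 100

print(f"existing rack: {existing:.1f} TB, Ultra rack: {ultra:.1f} TB "
      f"(+{increase_pct:.0f}%)")
```

The sketch covers memory capacity only; it does not model the 50% inference gain Nvidia cites, which depends on more than the amount of memory.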

Other announcements made at GTC

The tiny personal computer for AI developers, unveiled at CES in January as Project Digits, has received its formal branding as DGX Spark. The computer uses a version of the Grace-Blackwell combo called GB10. Nvidia is taking reservations for the Spark starting today.

A new version of the DGX “Station” desktop computer, first introduced in 2017, was unveiled. The new model uses the Grace-Blackwell Ultra, a big change from the original DGX Station, which relied on Intel CPUs as the main host processor, and will come with 784 gigabytes of DRAM. The computer will be manufactured by Asus, BOXX, Dell, HP, Lambda, and Supermicro, and will be available “later this year.”

Huang talked about an adaptation of Meta’s open-source Llama large language models, called Llama Nemotron, with capabilities for “reasoning,” that is, for producing a string of output itemizing the steps to a conclusion. Nvidia claims the Nemotron models “optimize inference speed by 5x compared with other leading open reasoning models.” Developers can access the models on Hugging Face.

Improved network switches

As widely expected, Nvidia has offered for the first time a version of its “Spectrum-X” network switch that puts the fiber-optic transceiver inside the same package as the switch chip rather than using standard external transceivers. Nvidia says the switches, which come with port speeds of 200 or 800 gigabits per second, improve on its existing switches with “3.5 times more power efficiency, 63 times greater signal integrity, 10 times better network resiliency at scale, and 1.3 times faster deployment.” The switches were developed with Taiwan Semiconductor Manufacturing, laser makers Coherent and Lumentum, fiber maker Corning, and contract assembler Foxconn.

Nvidia is building a quantum computing research facility in Boston that will integrate leading quantum hardware with AI supercomputers in partnerships with Quantinuum, Quantum Machines, and QuEra. The facility will give Nvidia’s partners access to the Grace-Blackwell NVL72 racks.

Oracle is making Nvidia’s “NIM” microservices software “natively available” in the management console of Oracle’s OCI computing service for its cloud customers.

Huang announced new partners integrating the company’s Omniverse software for virtual product design collaboration, including Accenture, Ansys, Cadence Design Systems, Databricks, Dematic, Hexagon, Omron, SAP, Schneider Electric with ETAP, and Siemens.

Nvidia unveiled Mega, a software design “blueprint” that plugs into Nvidia’s Cosmos software for robot simulation, training, and testing. Among early clients, Schaeffler and Accenture are using Mega to test fleets of robotic hands for materials handling tasks.

General Motors is now working with Nvidia on “next-generation vehicles, factories, and robots” using Omniverse and Cosmos.

Updated graphics cards

Nvidia updated its RTX graphics card line. The RTX Pro 6000 Blackwell Workstation Edition provides 96GB of DRAM and can speed up engineering tasks such as simulations in Ansys software by 20%. A second version, Pro 6000 Server, is meant to run in data center racks. A third version updates RTX in laptops.

Continuing the focus on “foundation models” for robotics, which Huang first discussed at CES when unveiling Cosmos, he revealed on Tuesday a foundation model for humanoid robots called Nvidia Isaac GR00T N1. The GR00T models are pre-trained by Nvidia to achieve “System 1” and “System 2” thinking, a reference to the book Thinking, Fast and Slow by cognitive scientist Daniel Kahneman. The software can be downloaded from Hugging Face and GitHub.

Medical devices giant GE HealthCare is among the first parties to use the Isaac for Healthcare version of Nvidia Isaac. The software provides a simulated medical environment that can be used to train medical robots. Applications could include performing X-ray and ultrasound exams in parts of the world that lack qualified technicians for these tasks.

Nvidia updated its Earth-2 technology for weather forecasting with a new version, Omniverse Blueprint for Earth-2. It includes “reference workflows” to help companies prototype weather prediction services, GPU acceleration libraries, “a physics-AI framework, development tools, and microservices.”

Storage equipment vendors can embed AI agents into their equipment through a new partnership called the Nvidia AI Data Platform. The partnership means equipment vendors may opt to include Blackwell GPUs in their equipment. Storage vendors Nvidia is working with include DDN, Dell, Hewlett Packard Enterprise, Hitachi Vantara, IBM, NetApp, Nutanix, Pure Storage, VAST Data, and WEKA. The first offerings from the vendors are expected to be available this month.

Nvidia said this is the largest GTC event to date, with 25,000 attendees expected in person and 300,000 online.
